I was thinking that too!

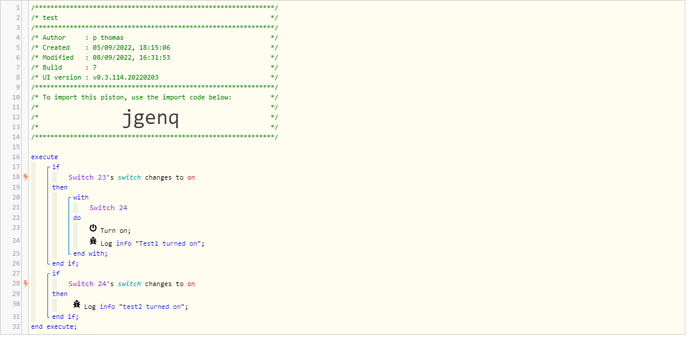

Here is a sample showing the issue. Both switches were off, and then the first was turned on. The second also turned on, but the piston failed to display the message for it.

08/09/2022, 16:32:44 +237ms

+2ms ╔Received event [Test2].switch = on with a delay of 46ms, canQueue: false, calledMyself: true

+4ms ║RunTime initialize > 4 LockT > 0ms > r9T > 1ms > pistonT > 0ms (first state access 3 2 2)

+6ms ║Runtime (6638 bytes) initialized in 1ms (v0.3.114.20220822_HE)

+7ms ║╔Execution stage started

+13ms ║║Condition #2 evaluated false (4ms)

+15ms ║║Condition group #1 evaluated false (condition changed) (6ms)

+18ms ║║Comparison (enum) on changes_to (string) on = false (0ms)

+19ms ║║Condition #7 evaluated false (2ms)

+19ms ║║Condition group #6 evaluated false (condition did not change) (3ms)

+23ms ║╚Execution stage complete. (15ms)

+25ms ╚Event processed successfully (24ms)

08/09/2022, 16:32:44 +205ms

+2ms ╔Received event [Test2].switch = on with a delay of 14ms, canQueue: true, calledMyself: false

+14ms ╚Event queued (12ms)

08/09/2022, 16:32:44 +164ms

+2ms ╔Received event [Test1].switch = on with a delay of 15ms, canQueue: true, calledMyself: false

+8ms ║RunTime initialize > 7 LockT > 1ms > r9T > 1ms > pistonT > 0ms (first state access 5 3 4)

+10ms ║Runtime (6628 bytes) initialized in 1ms (v0.3.114.20220822_HE)

+11ms ║╔Execution stage started

+16ms ║║Comparison (enum) on changes_to (string) on = true (0ms)

+17ms ║║Condition #2 evaluated true (4ms)

+19ms ║║Condition group #1 evaluated true (condition changed) (5ms)

+29ms ║║Executed physical command [Test2].on() (7ms)

+34ms ║║Test1 turned on

+36ms ║║Executed virtual command [Test2].log (2ms)

+41ms ║║Condition #7 evaluated false (3ms)

+41ms ║║Condition group #6 evaluated false (condition did not change) (4ms)

+45ms ║╚Execution stage complete. (34ms)

+48ms ╚Event processed successfully (46ms)