I started with this thread on the IPCamTalk forums, which covers Blue Iris: https://ipcamtalk.com/threads/script-to-detect-colour-make-and-model-of-car-from-ip-camera.23573/

“This uses Sighthound’s Cloud API which uses machine learning to determine the vehicle in the image. This API is free for the first 5000 calls, which my alerts fit well within. You can sign up on their website.

The script will check the previous vehicle detected and quit if it’s the same. This is because Alert images from Blueiris may be set off by other factors and you may have a car parked in your driveway…you don’t want to be alerted every minute that there’s a White Ford F Series.”

I got that Python script working and added some extra information (confidence percentages), then tied it into webCoRE with a URL request. Now, when an alert triggers, I get an announcement or a text describing what pulled into my driveway.
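To illustrate the kind of extra detail involved, here is a minimal sketch of pulling the name and confidence for make, model and color out of one vehicle object. The nesting mirrors the keys the script further down reads; the helper name `summarize_vehicle` and the sample values are made up for illustration and are not taken from Sighthound's documentation.

```python
def summarize_vehicle(obj):
    """Return {attribute: (name, confidence)} for one vehicle object.

    Assumes the nesting the script relies on:
    obj["vehicleAnnotation"]["attributes"]["system"][<attr>]["name" / "confidence"].
    """
    system = obj["vehicleAnnotation"]["attributes"]["system"]
    summary = {}
    for attr in ("make", "model", "color"):
        # Missing attributes come back as (None, None) instead of raising.
        entry = system.get(attr, {})
        summary[attr] = (entry.get("name"), entry.get("confidence"))
    return summary


# Hypothetical sample object; the values are invented for this example.
sample = {
    "objectType": "vehicle",
    "vehicleAnnotation": {
        "attributes": {
            "system": {
                "make": {"name": "Ford", "confidence": 0.9},
                "model": {"name": "F Series", "confidence": 0.8},
                "color": {"name": "white", "confidence": 0.95},
            }
        }
    },
}

summary = summarize_vehicle(sample)
for attr, (name, conf) in summary.items():
    print("%s: %s (confidence %s)" % (attr, name, conf))
```

Using `.get()` here is a design choice for the sketch: it avoids a `KeyError` if one attribute is missing from a response, at the cost of having to handle `None` later.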

Edit: I extended this to also check each image for a detectable vehicle, person, or face. The script keeps a count of each and passes along the details of the last vehicle detected. The Python script itself checks whether the last vehicle processed matches the current one; if so, it resets the car count to zero so the same vehicle is not reported again. The webCoRE piston tracks the last vehicle per camera. This has dramatically improved the reliability of the cameras' motion detection by cutting down on events triggered by rain, snow, and shadows.
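The duplicate check described above can be sketched roughly as follows. This is an illustration rather than the script itself: it stores the last vehicle in a JSON file instead of a pickle, and the names `check_and_store` and `last_vehicle.json` are hypothetical.

```python
import json
import os
import tempfile


def check_and_store(path, current):
    """Return True if `current` matches the vehicle saved at `path`.

    The file is always overwritten with `current` afterwards, so the next
    run compares against this one.
    """
    previous = None
    if os.path.exists(path):
        with open(path, "r") as f:
            previous = json.load(f)
    with open(path, "w") as f:
        json.dump(current, f)
    return current == previous


# Demo in a temporary directory (hypothetical file name).
state_file = os.path.join(tempfile.mkdtemp(), "last_vehicle.json")
truck = {"make": "Ford", "model": "F Series", "color": "white"}
sedan = {"make": "Honda", "model": "Civic", "color": "blue"}

first = check_and_store(state_file, truck)    # nothing stored yet
second = check_and_store(state_file, truck)   # same vehicle again
third = check_and_store(state_file, sedan)    # a different vehicle
print(first, second, third)
```

When the function returns `True`, the caller can zero the car count before sending the notification, which is what the script does with `cars = 0`.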

Also, note that I had NEVER coded in Python before…

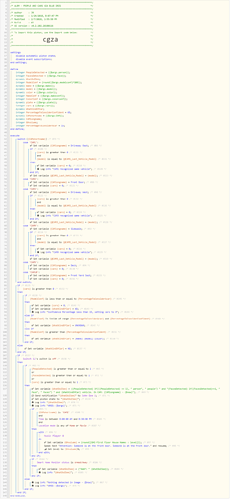

Python script:

import base64
import http.client
import json
import os
import time
import ssl
import smtplib
import glob
import pickle
import datetime
import requests
import sys
import logging

##
## SETUP LOGGING FUNCTIONS
##
logger = logging.getLogger('bi-sighthound')
hdlr = logging.FileHandler('bi-sighthound.log')
formatter = logging.Formatter('%(asctime)s %(levelname)s %(message)s')
hdlr.setFormatter(formatter)
logger.addHandler(hdlr)
logger.setLevel(logging.INFO)

# Camera name, passed as the first command-line argument
CAM = sys.argv[1]

# CHANGE THESE FOR YOUR ENVIRONMENT
sighthoundapi = '{YOUR API KEY}'
homeassistanturl = "https://graph-na02-useast1.api.smartthings.com/ap....{YOUR PISTON URL}"
filelocation = "C:\\BI-Sighthound\\" + CAM + ".sighthound.jpg"

# Reset Variables
person = 0
face = 0
cars = 0
modelconf = 0
make = 0
model = 0
color = 0
makeconf = 0
colorconf = 0
plate = 0
licenseplate = ''

##
## CREATE data file if it doesn't exist so we can compare if this is a duplicate vehicle on subsequent runs
##
if not os.path.exists('data.pickle'):
    new_data = {'make': "null", 'model': "null", 'color': "null"}
    with open('data.pickle', 'wb') as f:
        pickle.dump(new_data, f, pickle.HIGHEST_PROTOCOL)
    print("Created file...")

# Uncomment the lines below if you store Hi-Res Alert images and prefer to use those. They pick the most recent JPEG to use.
# (Raw string so the backslashes in the Windows path are taken literally.)
#list_of_files = glob.glob(r'C:\BlueIris\Alerts\*.jpg')
#latest_file = max(list_of_files, key=os.path.getctime)
#print(latest_file)
#filelocation = latest_file

# CREATE SIGHTHOUND REQUEST
headers = {"Content-type": "application/json", "X-Access-Token": sighthoundapi}
image_data = base64.b64encode(open(filelocation, 'rb').read())
params = json.dumps({"image": image_data.decode('ascii')})
# ssl.PROTOCOL_TLSv1 is deprecated; the default context negotiates a current TLS version
conn = http.client.HTTPSConnection("dev.sighthoundapi.com", context=ssl.create_default_context())
conn.request("POST", "/v1/detections?type=person,face", params, headers)

# Parse the response and count the people and faces detected
response = conn.getresponse()
results = json.loads(response.read())

for obj in results["objects"]:
    if obj["type"] == "person":
        person = person + 1
print("Number of People Detected: %s " % (person))

for obj in results["objects"]:
    if obj["type"] == "face":
        face = face + 1
print("Number of Faces Detected: %s " % (face))

##
## CHECK TO SEE IF THERE IS A CAR AND THE MAKE AND MODEL
##
headers = {"Content-type": "application/json", "X-Access-Token": sighthoundapi}
image_data = base64.b64encode(open(filelocation, 'rb').read())
params = json.dumps({"image": image_data.decode('ascii')})
# ssl.PROTOCOL_TLSv1 is deprecated; the default context negotiates a current TLS version
conn = http.client.HTTPSConnection("dev.sighthoundapi.com", context=ssl.create_default_context())
conn.request("POST", "/v1/recognition?objectType=vehicle,licenseplate", params, headers)

##
## Parse the response and print the make and model for each vehicle found
## This really only keeps the data from the last vehicle object, but it does count them all
##
response = conn.getresponse()
results = json.loads(response.read())

for obj in results["objects"]:
    if obj["objectType"] == "vehicle":
        system = obj["vehicleAnnotation"]["attributes"]["system"]
        make = system["make"]["name"]
        model = system["model"]["name"]
        color = system["color"]["name"]
        makeconf = system["make"]["confidence"]
        modelconf = system["model"]["confidence"]
        colorconf = system["color"]["confidence"]
        cars = cars + 1
        # Test for the "licenseplate" key itself; the original tested the
        # empty-string variable licenseplate, which never matches a key
        if "licenseplate" in obj["vehicleAnnotation"]:
            plate = obj["vehicleAnnotation"]["licenseplate"]["attributes"]["system"]["string"]["name"]
        print("Detected: %s %s %s %s" % (color, make, model, plate))

current_data = {
    'make': make,
    'model': model,
    'color': color
}

##
## Check to see if this is the same vehicle as the last run (multiple cameras)
##
with open('data.pickle', 'rb') as f:
    previous_data = pickle.load(f)

if current_data == previous_data:
    print('Data is the same!')
    cars = 0

with open('data.pickle', 'wb') as f:
    pickle.dump(current_data, f, pickle.HIGHEST_PROTOCOL)

print("Detected %s People %s Faces and %s Cars" % (person, face, cars))

##
## SEND URL
##
url = homeassistanturl
payload = json.dumps({
    "person": person,
    "face": face,
    "cars": cars,
    "make": make,
    "model": model,
    "color": color,
    "makeconf": makeconf,
    "modelconf": modelconf,
    "colorconf": colorconf,
    "plate": plate,
    "CAM": CAM
})
headers = {
    "content-type": "application/json"
}

response = requests.request("POST", url, data=payload, headers=headers)
print(response.status_code)
print(response.text)

##
## Write to log file so we can see what's being called if needed
##
logger.info("%s - People:%s Faces:%s Cars:%s Make:%s Model:%s Color:%s Conf:%s - Status Code:%s Response:%s" % (CAM, person, face, cars, make, model, color, modelconf, response.status_code, response.text))
#logger.info("Status Code:%s Response:%s" % (response.status_code, response.text))

##
## Remove log file if older than 60 days (let's keep things cleaned up)
##
now = time.time()
if os.stat('bi-sighthound.log').st_mtime < (now - (60 * 86400)):
    hdlr.close()
    os.remove('bi-sighthound.log')
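
One thing to watch with the cleanup step: the script writes to the log just before checking its modification time, so the file will normally look fresh and the 60-day test is unlikely to pass. A possible alternative, sketched here under the assumption that daily rotation is acceptable, is the standard library's `TimedRotatingFileHandler`, which rolls the file over and prunes old copies on its own. The `make_logger` helper, the logger name, and the temporary path are hypothetical.

```python
import logging
import logging.handlers
import os
import tempfile


def make_logger(log_path, days_to_keep=60):
    """Build a logger that rotates daily and keeps `days_to_keep` old files."""
    logger = logging.getLogger("bi-sighthound-rotating")
    logger.setLevel(logging.INFO)
    handler = logging.handlers.TimedRotatingFileHandler(
        log_path, when="D", interval=1, backupCount=days_to_keep
    )
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(message)s")
    )
    logger.addHandler(handler)
    return logger, handler


# Demo: write one line to a log in a temporary directory.
log_path = os.path.join(tempfile.mkdtemp(), "bi-sighthound.log")
logger, handler = make_logger(log_path)
logger.info("Front - People:0 Faces:0 Cars:1")
handler.close()

with open(log_path) as f:
    contents = f.read()
print(contents)
```

With this approach the manual `os.stat` check and `os.remove` call would no longer be needed, since the handler deletes rotated files beyond `backupCount`.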