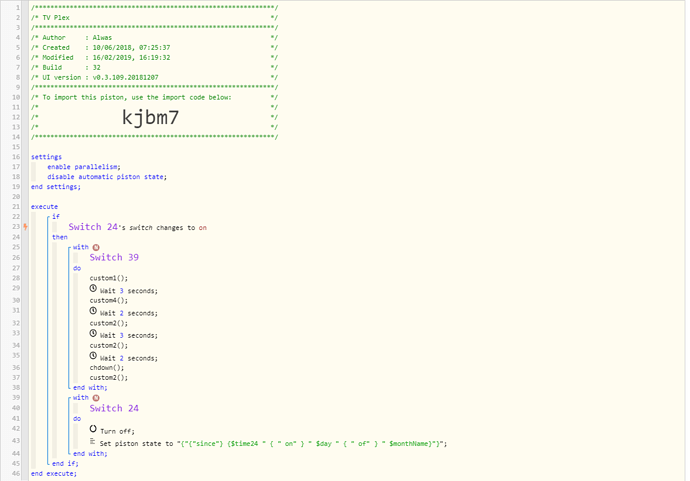

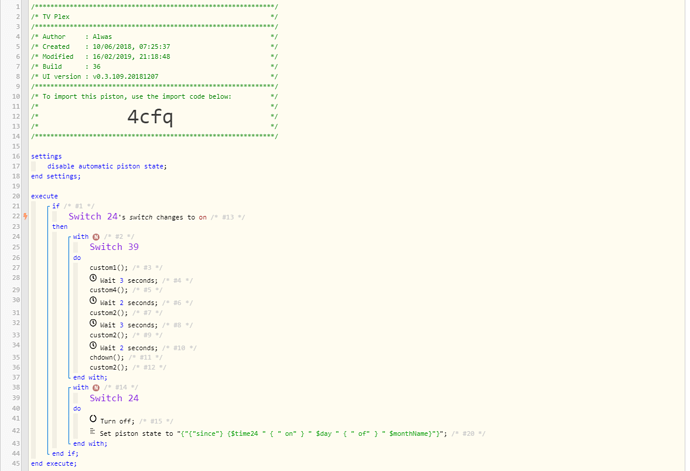

This is really annoying. I have some pistons, triggered by virtual switches, that open various apps on my TV, such as Plex. A Raspberry Pi, controlled by webCoRE, sends the individual IR commands to my Samsung TV. They’ve worked fine for a year, snappy and precise, but now a 3-second wait can take anywhere from 3 seconds to 10 minutes. I can’t narrow down what the problem is. Everything leads me to think it’s webCoRE. Maybe I have too many pistons (110)? Maybe I need to create another instance of webCoRE?

For example, my piston is set to turn off a virtual switch at the end; it’s been 20 minutes and the virtual switch is still on!

I read somewhere that turning on Parallelism would help, but it hasn’t.

```text
16/02/2019, 19:37:24 +579ms
+0ms ╔Received event [Home].time/recovery = 1550342244578 with a delay of 0ms
+908ms ║RunTime Analysis CS > 352ms > PS > 504ms > PE > 52ms > CE
+912ms ║Runtime (39510 bytes) successfully initialized in 504ms (v0.3.109.20181207) (910ms)
+913ms ║╔Execution stage started
+924ms ║╚Execution stage complete. (10ms)
+938ms ╚Event processed successfully (937ms)
```

```text
16/02/2019, 19:37:02 +304ms
+1ms ╔Received event [Home].execute = recovery with a delay of 226ms
+124ms ║RunTime Analysis CS > 16ms > PS > 57ms > PE > 50ms > CE
+126ms ║Runtime (39496 bytes) successfully initialized in 57ms (v0.3.109.20181207) (125ms)
+127ms ║╔Execution stage started
+140ms ║║Cancelling condition #13’s schedules…
+142ms ║║Condition #13 evaluated false (7ms)
+145ms ║║Cancelling condition #1’s schedules…
+146ms ║║Condition group #1 evaluated false (state changed) (13ms)
+171ms ║╚Execution stage complete. (44ms)
+182ms ╚Event processed successfully (182ms)
```

```text
16/02/2019, 19:37:02 +270ms
+1ms ╔Received event [Home].execute = recovery with a delay of 216ms
+125ms ║RunTime Analysis CS > 17ms > PS > 60ms > PE > 48ms > CE
+128ms ║Runtime (39496 bytes) successfully initialized in 60ms (v0.3.109.20181207) (126ms)
+129ms ║╔Execution stage started
+143ms ║║Cancelling condition #13’s schedules…
+144ms ║║Condition #13 evaluated false (9ms)
+145ms ║║Cancelling condition #1’s schedules…
+146ms ║║Condition group #1 evaluated false (state changed) (11ms)
+172ms ║╚Execution stage complete. (43ms)
+184ms ╚Event processed successfully (184ms)
```

```text
16/02/2019, 19:36:14 +629ms
+1ms ╔Received event [TV Plex].switch = on with a delay of 394ms
+238ms ║RunTime Analysis CS > 19ms > PS > 158ms > PE > 62ms > CE
+242ms ║Runtime (39497 bytes) successfully initialized in 158ms (v0.3.109.20181207) (240ms)
+244ms ║╔Execution stage started
+258ms ║║Comparison (enum) on changes_to (string) on = true (2ms)
+261ms ║║Cancelling condition #13’s schedules…
+263ms ║║Condition #13 evaluated true (11ms)
+265ms ║║Cancelling condition #1’s schedules…
+267ms ║║Condition group #1 evaluated true (state changed) (15ms)
+272ms ║║Cancelling statement #2’s schedules…
+550ms ║║Executed physical command [TV].custom1() (272ms)
+551ms ║║Executed [TV].custom1 (274ms)
+556ms ║║Executed virtual command [TV].wait (1ms)
+557ms ║║Waiting for 3000ms
+3628ms ║║Executed physical command [TV].custom4() (68ms)
+3629ms ║║Executed [TV].custom4 (70ms)
+3634ms ║║Executed virtual command [TV].wait (0ms)
+3635ms ║║Waiting for 2000ms
+5701ms ║║Executed physical command [TV].custom2() (63ms)
+5702ms ║║Executed [TV].custom2 (65ms)
+5707ms ║║Executed virtual command [TV].wait (0ms)
+5708ms ║║Waiting for 3000ms
+8773ms ║║Executed physical command [TV].custom2() (62ms)
+8775ms ║║Executed [TV].custom2 (64ms)
+8779ms ║║Executed virtual command [TV].wait (1ms)
+8781ms ║║Requesting a wake up for Sat, Feb 16 2019 @ 7:36:25 PM CET (in 2.0s)
+8796ms ║╚Execution stage complete. (8552ms)
+8810ms ║Setting up scheduled job for Sat, Feb 16 2019 @ 7:36:25 PM CET (in 1.973s)
+8820ms ╚Event processed successfully (8821ms)
```
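To narrow down where the time goes, the timings in the `[TV Plex]` entry can be checked mechanically. The Python sketch below is a hypothetical helper (not part of webCoRE or SmartThings). It reads a subset of the lines copied from that entry, pairs each "Waiting for" line with the next physical command, and compares the scheduled job's due time with the first recovery run. It assumes each physical-command line is stamped when the command finishes, so it subtracts the command's own duration to find when the wait ended.

```python
import re
from datetime import datetime, timedelta

# Subset of the [TV Plex].switch = on entry, copied from the log above.
# Offsets are milliseconds from the moment that event was received.
TV_PLEX_ENTRY = """\
+557ms ║║Waiting for 3000ms
+3628ms ║║Executed physical command [TV].custom4() (68ms)
+3635ms ║║Waiting for 2000ms
+5701ms ║║Executed physical command [TV].custom2() (63ms)
+5708ms ║║Waiting for 3000ms
+8773ms ║║Executed physical command [TV].custom2() (62ms)
+8810ms ║Setting up scheduled job for Sat, Feb 16 2019 @ 7:36:25 PM CET (in 1.973s)
"""

# Entry timestamps as shown in the log (day/month/year).
ENTRY_START = datetime.strptime("16/02/2019 19:36:14.629", "%d/%m/%Y %H:%M:%S.%f")
FIRST_RECOVERY = datetime.strptime("16/02/2019 19:37:02.270", "%d/%m/%Y %H:%M:%S.%f")

OFFSET_RE = re.compile(r"^\+(\d+)ms\s+(.*)$")
WAIT_RE = re.compile(r"Waiting for (\d+)ms")
CMD_RE = re.compile(r"Executed physical command \S+ \((\d+)ms\)")
JOB_RE = re.compile(r"Setting up scheduled job .*\(in ([\d.]+)s\)")


def measure_waits(log_text):
    """Return (requested_ms, actual_ms) for each in-line wait.

    Assumes a physical-command line is stamped when the command finishes,
    so its own duration is subtracted to find when the wait ended.
    """
    results = []
    pending = None  # (offset_ms, requested_ms) of the wait in progress
    for line in log_text.splitlines():
        m = OFFSET_RE.match(line)
        if not m:
            continue
        offset, body = int(m.group(1)), m.group(2)
        wait = WAIT_RE.search(body)
        cmd = CMD_RE.search(body)
        if wait:
            pending = (offset, int(wait.group(1)))
        elif cmd and pending:
            started = offset - int(cmd.group(1))
            wait_offset, requested = pending
            results.append((requested, started - wait_offset))
            pending = None
    return results


def wake_up_lateness(entry_start, log_text, next_run):
    """Seconds between the scheduled job's due time and the next run, or None."""
    for line in log_text.splitlines():
        m = OFFSET_RE.match(line)
        job = JOB_RE.search(line)
        if m and job:
            # Due time = entry start + log offset + the "(in N s)" delay.
            due = entry_start + timedelta(milliseconds=int(m.group(1)),
                                          seconds=float(job.group(1)))
            return (next_run - due).total_seconds()
    return None


if __name__ == "__main__":
    for requested, actual in measure_waits(TV_PLEX_ENTRY):
        print(f"wait {requested} ms -> next command started after {actual} ms")
    late = wake_up_lateness(ENTRY_START, TV_PLEX_ENTRY, FIRST_RECOVERY)
    print(f"first recovery run: {late:.3f} s after the wake-up was due")
```

On these numbers the three in-line waits each end only about 3 ms late, so they look fine. The fourth wait is handled differently: instead of waiting in line, the piston requests a wake-up and sets up a scheduled job due at 7:36:25 PM. Nothing in the log above corresponds to that job running; the next runs are the two `execute = recovery` events about 37 s later, and in both of them condition group #1 evaluates false (state changed) while its schedules are cancelled.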