This is the perfect approach with budding technology (as well as other things).

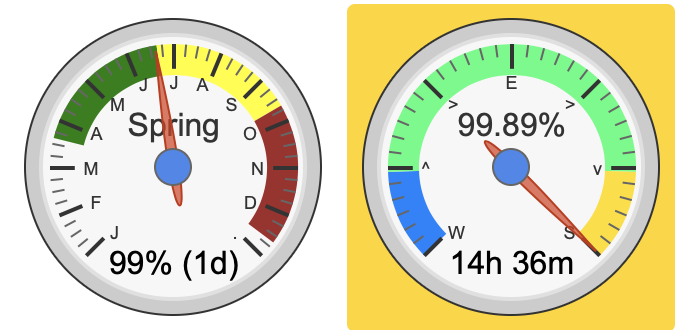

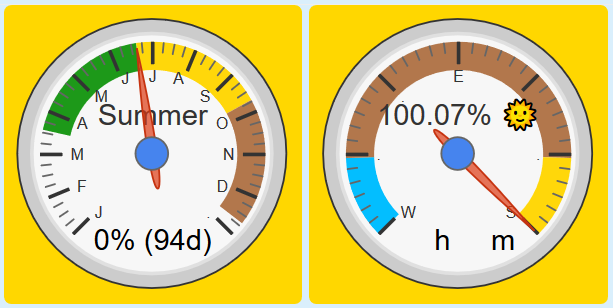

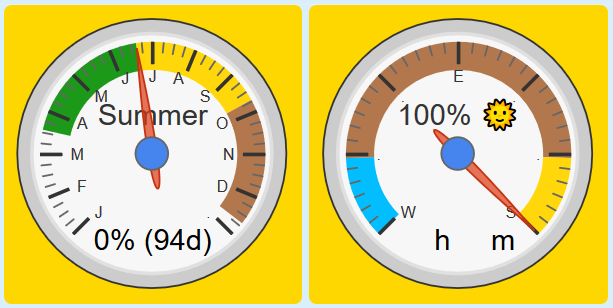

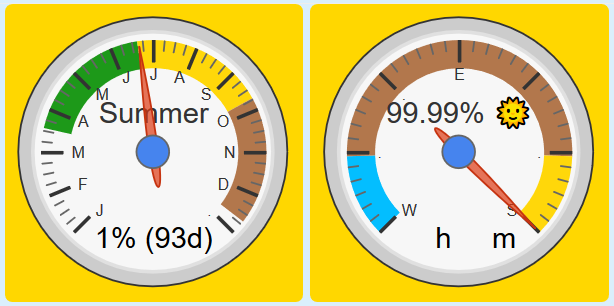

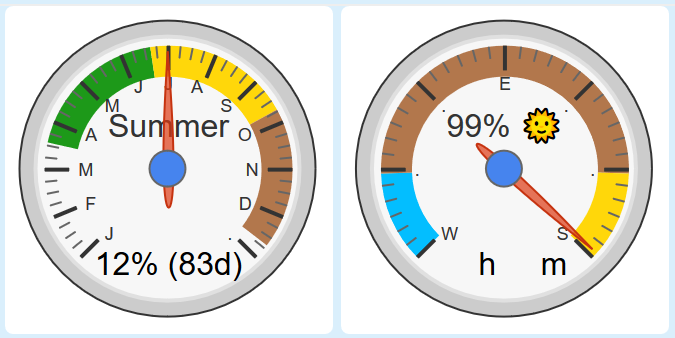

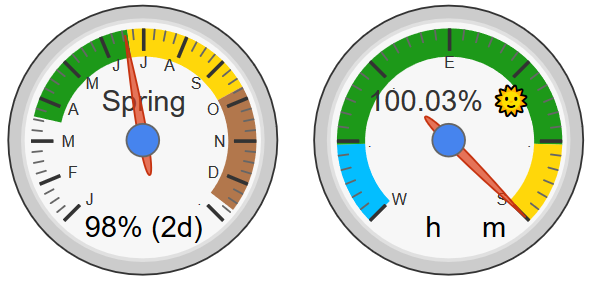

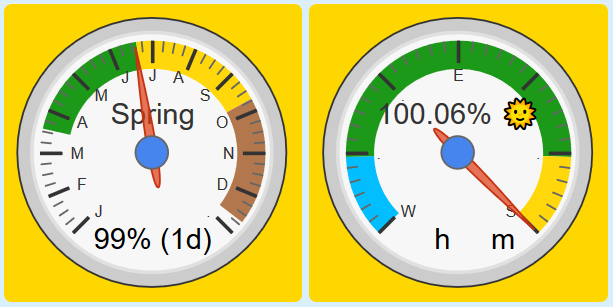

For the record, for anyone jumping halfway into this thread: we’ve been talking about a literal 1- or 2-second variance over an entire year (that 0.01% estimate was not an exaggeration).
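To put that 0.01% in context, here is a rough sketch. It assumes the 0-100 scale maps the year's shortest day to 0 and its longest day to 100 (my reading of the thread), and it uses a made-up 6-hour gap between those two days. `day_length_pct` and `error_pct` are hypothetical helpers for illustration only.

```python
def day_length_pct(length_s: float, shortest_s: float, longest_s: float) -> float:
    """Map a day length (seconds) onto a 0-100 scale between the year's
    shortest and longest days. Hypothetical helper, not an existing API."""
    return (length_s - shortest_s) / (longest_s - shortest_s) * 100.0


def error_pct(error_s: float, shortest_s: float, longest_s: float) -> float:
    """How many points on the 0-100 scale an error of `error_s` seconds is worth."""
    return error_s / (longest_s - shortest_s) * 100.0


# Assumed example values: a 9 h shortest day and a 15 h longest day.
shortest = 9 * 3600
longest = 15 * 3600
print(round(error_pct(2, shortest, longest), 4))  # → 0.0093
```

With those assumed numbers, a 2-second error is worth about 0.009 points on the scale, which rounds to roughly the 0.01% figure. A location with a larger seasonal swing would make the error even smaller.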

I.e., we are nitpicking to the nth degree here, LOL

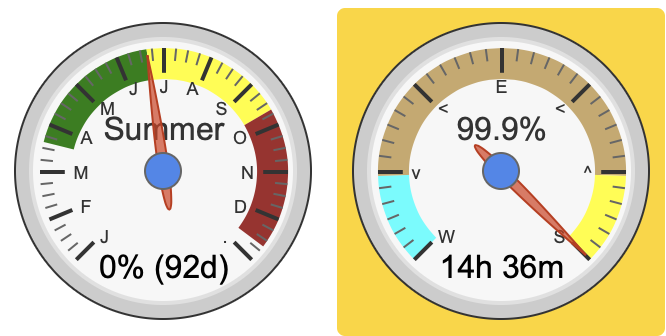

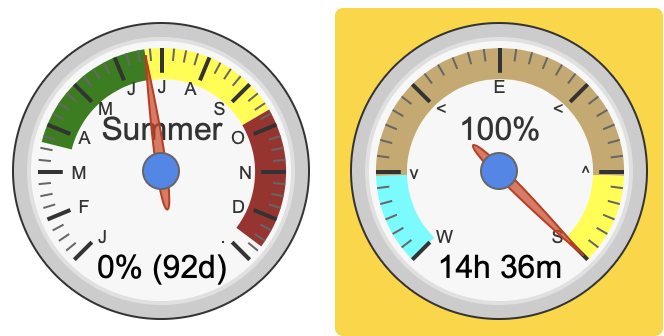

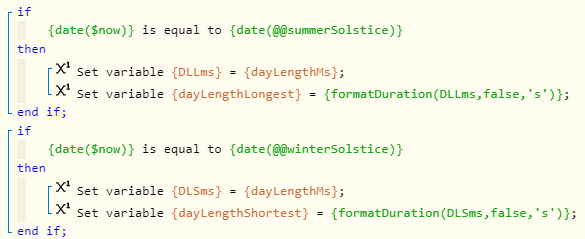

That being said, I am all about precision. I originally wanted the same as you, @fieldsjm. I wanted each cycle to start and end with exactly 0 & 100. I even went so far as to create my own personal API listing the next ten years for my location.

The irony is that it worked great, but the timeanddate station (where I originally grabbed the data) was a few miles away… so I had to shift the numbers to bring “T&D time” over to “TWC time”.

(since TWC is where we are getting the daily data from)

So the question I have for myself now is, should I take the next ten years of T&D data, and subtract ten seconds from each Summer Solstice?
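If you do go the shifting route, the arithmetic itself is just a constant offset. The sketch below assumes you have a few same-day day-length pairs from both sources to estimate that offset, and that the gap between the two locations stays roughly constant, which is itself questionable for two different spots. `estimate_offset` and `shift_to_twc` are hypothetical names, and using the mean difference is my own choice of estimator.

```python
from statistics import mean


def estimate_offset(twc_lengths_s: list[float], td_lengths_s: list[float]) -> float:
    """Average same-day difference (TWC minus T&D), in seconds."""
    if len(twc_lengths_s) != len(td_lengths_s) or not twc_lengths_s:
        raise ValueError("need equal-length, non-empty sample lists")
    return mean(t - d for t, d in zip(twc_lengths_s, td_lengths_s))


def shift_to_twc(td_solstice_lengths_s: dict[int, float], offset_s: float) -> dict[int, float]:
    """Apply the same offset to every year's T&D solstice day length."""
    return {year: length + offset_s for year, length in td_solstice_lengths_s.items()}
```

An offset of -10 corresponds to the "subtract ten seconds from each Summer Solstice" case.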

(ugh, I don’t like a test that takes a year to get a single sample… and calibrating one location off of another is another can of worms)

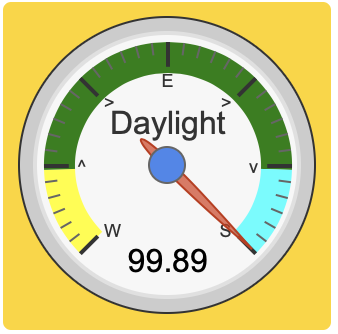

Here is an interesting perspective on this you may enjoy.

I do not believe the two-second variance I saw in my 15-year skim was due to any astronomical shift (it bounced around inside the 2-second window). Now honestly, I have not done the math, but my guess would be that it may take a whole lifetime or longer for the Solstice dayLength to really shift by a second.

No. What I think is happening is this:

- We are tracking sunrise in seconds (not ms)

- We are tracking sunset in seconds (not ms)

- Both of those numbers are being rounded to the nearest second

- Then we subtract sunrise from sunset

- Which means the final answer may be off by as much as 998 milliseconds (499 ms × 2)

Now, if we apply that over a ten-year period, the results will always fall within -0.998 to +0.998 seconds of the true value (a window of approximately two seconds).
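The rounding argument above is easy to check with a quick simulation. This sketch assumes both times are rounded to the nearest whole second (with .5 rounding up) before subtracting. The random times are synthetic, not real sunrise or sunset data.

```python
import math
import random


def round_half_up(seconds: float) -> int:
    """Round to the nearest whole second, with .5 rounding up."""
    return math.floor(seconds + 0.5)


def day_length_error(sunrise_s: float, sunset_s: float) -> float:
    """Day length from rounded times minus the true (unrounded) day length."""
    rounded = round_half_up(sunset_s) - round_half_up(sunrise_s)
    return rounded - (sunset_s - sunrise_s)


random.seed(0)
errors = []
for _ in range(100_000):
    # Synthetic times of day in seconds, with millisecond resolution.
    sunrise = random.randint(5 * 3600 * 1000, 7 * 3600 * 1000) / 1000
    sunset = random.randint(19 * 3600 * 1000, 21 * 3600 * 1000) / 1000
    errors.append(day_length_error(sunrise, sunset))

print(f"min error: {min(errors):+.3f} s, max error: {max(errors):+.3f} s")
```

Both extremes should land just inside ±1 second, and most errors cluster near zero, because the two rounding errors often partly cancel.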

Now that I think about it, if I were being really anal, I would base my math on the invisible zero between the two extremes (because that is where the sun truly is).
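One way to read that "invisible zero" idea is to collect several years of rounded solstice day lengths and take the midpoint of the smallest and largest values (the midrange) as the best guess at the true value. The midrange is my choice here; a plain average of the samples would be a reasonable alternative. `midrange` is a hypothetical helper, and the sample values below are made up.

```python
def midrange(samples_s: list[int]) -> float:
    """Midpoint between the smallest and largest observed values, in seconds."""
    if not samples_s:
        raise ValueError("need at least one sample")
    return (min(samples_s) + max(samples_s)) / 2


# Made-up rounded solstice day lengths that bounce between two values.
print(midrange([54000, 54001, 54000, 54001, 54001]))  # → 54000.5
```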

I don’t want to sound like an old fuddy-duddy, but something is always lost when going from analog to digital. (as demonstrated in the first post here)

TL;DR:

I suspect that the +/- 1 second possibility is only due to us not seeing sunrise and sunset in milliseconds.

I’d love to see this built in.

(as well as historical data, if we are making a list, LOL)

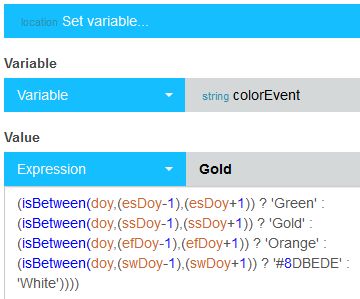

hot time of the year, and yellow during the cold season… but hey, you do you.